Technology, Pregnancy, and Parenthood

A look into gig workers in the childcare industry, social media & pregnancy loss, and AI-enabled parenting

Written on December 3, 2021

I was 20 years old when I bought the book Letter to a Child Never Born by Oriana Fallaci, the late Italian journalist. Halfway through reading it, I told myself that if I ever became voluntarily pregnant, I would write a similar book to my child, whether they were born or not.

I made that promise to myself on an early spring day, in a parked car on a crowded street in Tehran. Fourteen years later, I became pregnant on an early spring day in the not-at-all-crowded city of Santa Cruz (and not in a parked car!). I didn't write my version of Letter to a Child Never Born. Instead, I gathered all my drained energy to sit down and write this little newsletter. My hope is that this newsletter will survive on Mailchimp/Substack servers and that one day my child will see that their mother's love for writing about human rights and technology came before writing a letter to them :)

Join me for the next few minutes to read about technology, pregnancy, and parenthood; the rights of gig workers, including nannies and domestic workers; abortion and online propaganda; and baby gadgets and cultural differences. I also have a few updates about some of the work we have been doing at my nonprofit organization, Taraaz.

Babysitters and their rights in the gig economy!

When we talk about gig workers, we often picture workers in the transportation and delivery industries. However, in her project "Nanny Surveillance State," Di Luong turns our attention to nannies, babysitters, and childcare gig workers. You can join Di's working group at the Mozilla Foundation to research issues such as the surveillance and data-driven technologies used to constantly track domestic workers' productivity and health.

+ Read also Julia Ticona's article "Essential and Untrusted," which walks you through racial and gender stereotypes, accessibility issues, and demographic exclusion on nanny-recruiting platforms such as Care.com, Sittercity, and Urban Sitter. She writes: "just like ride-hailing apps, online care platforms are management technologies. The difference is that instead of direct 'algorithmic management'—using surveillance and data to automate decision-making and maximize productivity—care platforms use 'actuarial management,' which also includes surveillance, but to classify workers in an attempt to minimize losses for employers. These platforms incentivize workers to expose themselves as much as possible to establish their trustworthiness. The information collected goes into the algorithm, which sorts the workers into categories that are anything but neutral." Julia has a forthcoming book: Left to Our Own Devices.

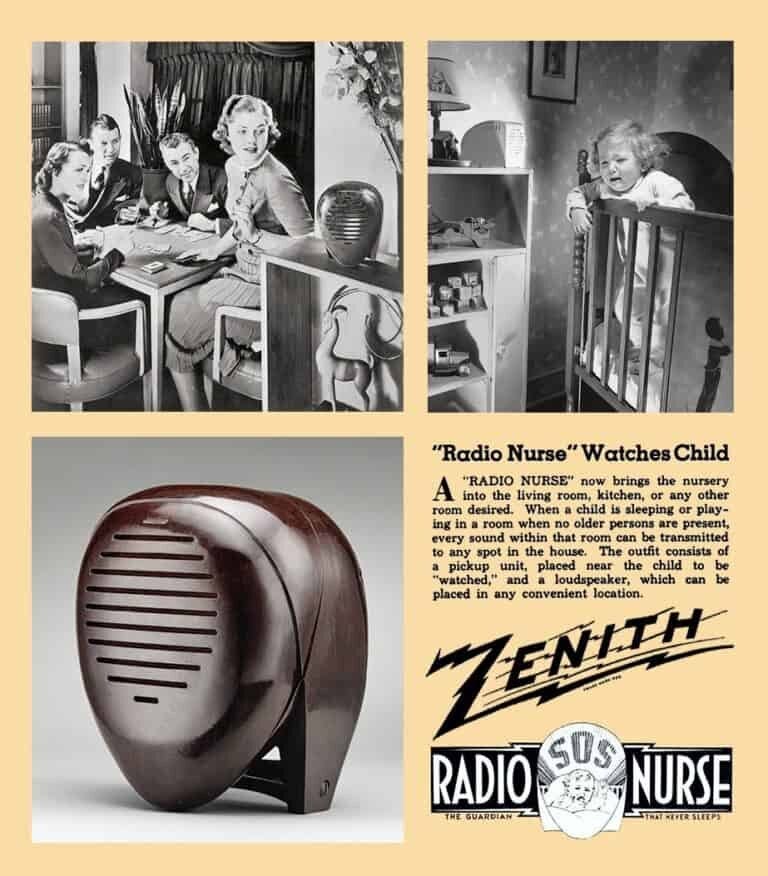

"Mother's Little Helpers: Technology in the American Family." Helper or hassler though 🧐?!

I'm not sure if it was my third-trimester pregnancy symptoms or my anger issues or both or neither, but a few days ago, at 4 AM, I cut the cord of our Philips Hue smart home router and shelved our Apple HomePods. And to be honest, that was not necessarily because I'm a privacy zealot, as my field expects me to be. Ironically, and in direct opposition to how these tools are marketed, my frustration came from the inconvenience of these "smart" devices! Stacey Higginbotham writes in detail about the unbearable fussiness of the smart home. But it's not just smart lights. There has been enormous research and marketing interest in baby-raising gadgets: deep-learning-based baby cry detectors, smart pacifiers, detectors that alert you when a blanket covers a baby's face, breast-milk nutrition analysis tools, and many, many more. Well, I don't know how these tools account for the fact that babies cry differently in different cultures and languages! (Do I see 👀 any fairness in machine learning researchers here?)

All this to say that Hannah Zeavin has a book coming out that "tells the complicated story of American techno-parenting, from The Greatest Generation through millennials." Read more and listen to her talk about Mother’s Little Helpers: Technology in the American Family. I can't wait to read it and see whether these tools are parents' helpers or hasslers. You might also like to listen to this podcast about emotion detection and raising kids in "an AI world."

Patriarchy, racism, and stigmatizing the poor in digital childcare welfare: from the US to Latin America

Cities around the US have started using algorithmic risk assessment tools to predict "the likelihood that a child in a given situation or location will be maltreated." The resulting score is then used to inform authorities' decisions about further investigation or even removing children from their parents' custody. The ACLU published a white paper summarizing the flaws in these systems, which include disproportionately penalizing Black and Indigenous families, a lack of family input in the development process, and historic racial and socioeconomic biases in the data used to train these predictive tools. You can also listen to Khadijah Abdurahman's talk, "Calculating the Souls of Black Folk: Reflections on Predictive Analytics in the Family Regulation System."

+ Read also this report by the Why is AI a Feminist Issue project, which describes the Brazilian and Argentinian governments' collaboration with Microsoft to develop a digital system to predict teenage pregnancy, especially in poor provinces in Latin America. The report raises concerns about the tool's role in surveilling and stigmatizing the poor. It also cites a technical audit, concluding that "the results [of the predictive tool] were falsely oversized due to statistical errors in the methodology; the database is biased; it does not take into account the sensitivities of reporting unwanted pregnancy, and therefore data collected is inadequate to make any future prediction."

Social media and LGBTQ persons' pregnancy loss disclosure

In a new paper based on qualitative analysis, "LGBTQ Persons' Pregnancy Loss Disclosures to Known Ties on Social Media," researchers at the University of Michigan discuss the barriers LGBTQ people face in disclosing pregnancy loss, even on their private social media networks. These barriers include stigma around the topic, a lack of awareness and education about pregnancy loss, privacy concerns, hateful reactions, and the dominant cisgender, heterosexual narrative around pregnancy. The researchers "argue that social media platforms can better facilitate disclosures about silenced topics by enabling selective disclosure, enabling proxy content moderation, providing education about silenced experiences, and prioritizing such disclosures in news feeds."

Reproductive rights and computational propaganda

Read also Women’s Reproductive Rights Computational Propaganda in the United States, by Brandie Nonnecke et al., which describes the role of bots in spreading disinformation, harassment, and divisiveness on Twitter during the US election.

+ To my fellow Iranians: I have been reading a book called Conceiving Citizens: Women and the Politics of Motherhood in Iran. Not surprisingly, the book describes how, across the nineteenth and twentieth centuries, the control of women's bodies was shaped by the Iranian government's goals around education, employment, political rights, international reputation, and religious ideology. This topic is worth researching in the context of social media users' reactions to, and influence on, the government's policies. Let me know if you are interested in working on it together (roya.pakzad@gmail.com).

Sperm Series: I Download an App

This episode of the Longest Shortest Time podcast discusses using online platforms to find sperm donors and the challenges involved, from trust to racial discrimination.

📝 My Work at Taraaz

It has been almost two years since I started Taraaz, a research and advocacy nonprofit working at the intersection of tech & human rights. You can subscribe to our newsletter for updates on Taraaz's research, advocacy work, events, and more.

But here is also a snapshot of what we have been doing:

💬 Our work on countering harmful speech targeting Muslims online won a Facebook research award and has a new webpage. Our new paper on the limitations of content moderation in the context of sustained and accumulated harms over time will come out soon. Stay tuned!

📱Our work with Filterwatch on digital rights & technology sector accountability in Iran used the Ranking Digital Rights Corporate Accountability Index (RDR) methodology to evaluate the human rights commitments of tech companies in Iran. Read and watch it here, and stay tuned for our new paper on the digitization of public spaces and human rights in Iran, coming out in just a few weeks!

📝Next week, Taraaz and the CITRIS Policy Lab's Technology and Human Rights fellows will submit and present their work on two topics: (1) the smart city as surveillance city and (2) the opportunities and constraints of AI development in Nigeria.

🔊 We joined other human rights and internet freedom organizations to condemn Internet shutdowns in Iran, the silencing of Palestinians on social media platforms, discriminatory actions by e-payment providers, Spotify's problematic speech recognition technology, and more.

🥉I won third place in Twitter Engineering’s first algorithmic bias bounty challenge for my experiment, "Gazing at the Mother Tongue," which showed a lack of language diversity and representation in the treatment of Arabic script versus Latin script, with implications for social media content moderation practices.

🌏 We also worked with groups including Amnesty International, Ethical Resolve, Luminate, Facebook, Business for Social Responsibility (BSR), and the Max Planck Institute for Empirical Aesthetics on other consultancy projects. Subscribe to Taraaz's newsletter and follow us on Twitter and LinkedIn for any public updates on these projects.
