The origin of the Mechanical Turk and Stalin’s “deepfakes”

From the 13th edition of my newsletter, Humane AI

From the 13th edition of my newsletter, Humane AI

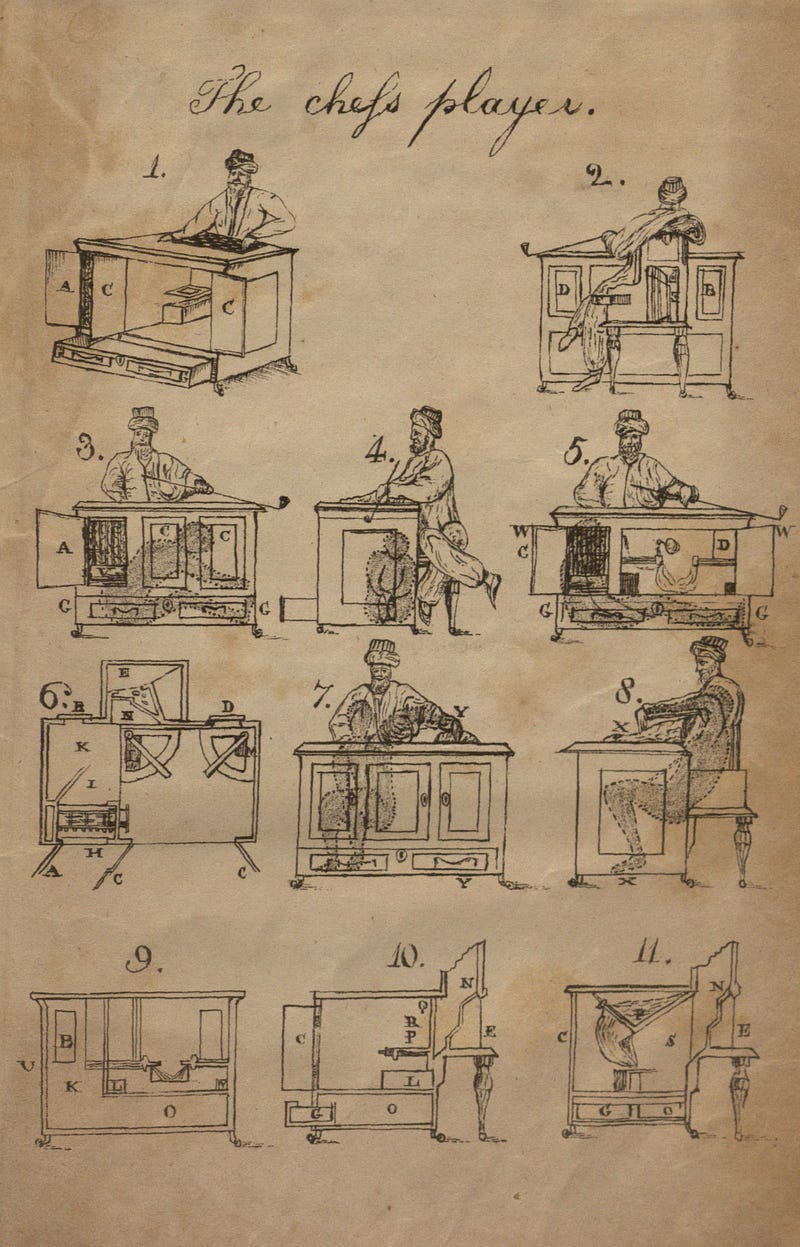

✏ The Mechanical Turk: “Artificial Artifical Intelligence”

In the late 18th century, a Hungarian inventor presented a new invention to the Empress of Austria. It was an “intelligent” machine that came to be known as the Mechanical Turk, capable of playing chess at a high level. Over the years, the automaton would defeat opponents ranging from Napoleon to Benjamin Franklin. It was not until decades later that the secret was revealed: a human operator was hidden inside this supposedly thinking machine.

Two centuries later, are we continuing to hide the role (and, more, importantly the rights) of humans behind our “magical” intelligent machines? For a day’s worth of work on labeling training data, transcribing audio files, and other menial tech tasks, how much do Amazon’s Mechanical Turkers make? Do they have health insurance? Does the US Fair Labor Standards Act apply to them? In this piece, the BBC’s David Lee reports on the Kenyans who work annotating images to be used for training self-driving cars. The Atlantic also reported on Amazon Mechanical Turkers’ work situations: “3.8 million tasks on Mechanical Turk, performed by 2,676 workers, found that those workers earned a median hourly wage of about $2 an hour. Only 4 percent of workers earned more than $7.25 an hour.”

If you are interested in learning more about advocating for crowd workers rights, check out Turker Nation and We Are Dynamo: Overcoming Stalling and Friction in Collective Action for Crowd Workers.

✏Limitation of Computational Fact Checking and Social Media Analysis

Recently I learned about a fact-checking tool called FakerFact. Using Natural Language Processing, based on training on millions of documents, the tool lets you know what kind of article you are reading: is the article written in a way that focuses on facts, or is it written with a manipulative agenda? FakerFact is not the only one: Chequeabot in Argentina, Full Fact in the UK, Botometer, and many more.

Despite the importance of these tools, perhaps the next step is to understand the limitations of these technologies: who sets the standards of whether an article is truthful or not? Who is the targeted audience: people with a low digital literacy level, or a high one? Can the tool take the context of certain news or certain platforms into account? Is there a chance that the tool disproportionately censors voices from marginalized groups? This great report by the Center for Media and Technology looks into some of the limitations of content moderation tools.

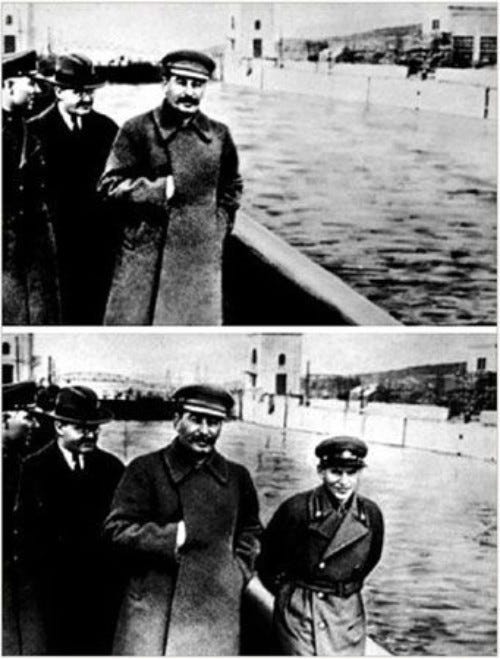

✏ Comrade Stalin, Doctored Images, and Deepfakes

Twelve voices were shouting in anger, and they were all alike. No question, now, what had happened to the faces of the pigs. The creatures outside looked from pig to man, and from man to pig, and from pig to man again; but already it was impossible to say which was which.

These are the very last lines of Animal Farm by George Orwell. I was 11 when I read it in Farsi; too naive to understand the correspondence between the pig Neopolean in the book and Joseph Stalin of the Soviet Union. But those final lines stayed with me until today. This summer, I visited Stalin’s childhood home and museum in Gori, Georgia. While looking at the rows and rows of photos, I thought about the USSR’s censorship and photo manipulation. Were these strategies ultimately successful in changing people’s mind? In hiding reality? Are there any lessons in history that we can apply to today’s concerns around deepfakes?

In addition to the New Yorker article, this great report by WITNESS gives an overview of deepfakes, the technology behind their creation, and a series of recommendations for tech companies, media literacy educators, and policy-makers.

✏ A “Nutrition Label” for Datasets

Recently there have been some excellent discussions among machine learning researchers about improving the transparency and quality of the training data that are fed into machine learning models. MIT’s dataset “Nutrition Label” aims to “create a standard for interrogating datasets that will ultimately drive the creation of better, more inclusive algorithms.” Datasheets for Datasets and Model Cards for Model Reporting are two other great standardization approaches to “identify how a dataset was created, and what characteristics, motivations, and potential skews it represents.”

✏ The Refugee Detectives

In this piece, Atlantic reporter Graeme Wood shows how Germany’s Federal Office for Migration and Refugees has been using facial recognition tools and language identification as a “lie detector” to evaluate asylum seekers’ applications. From a human rights perspective, there are many concerns around the “black box” nature of these tools, error rates for different languages and dialects, the level of human oversight, and privacy concerns. Germany is not the only country currently deploying AI tools to control its border. Canada, UK, and Australia have also begun using similar technologies. “Bots at the Gate: A Human Rights Analysis of Automated Decision Making in Canada’s Immigration and Refugee System” shows how “these initiatives may place highly vulnerable individuals at risk of being subjected to unjust and unlawful processes.”

✏ The Measure and Mismeasure of Fairness: A Critical Review of Fair Machine Learning

In this new working paper, Stanford researchers Sam Corbett-Davies and Sharad Goel argue that the three common approaches toward ML fairness, including anti-classification (i.e. protected features, like race, gender, and their proxies, are not explicitly used to make decisions); classification parity (equal error rates across protected groups) and calibration (conditional on risk estimates, outcomes are independent of protected features) have significant statistical limitations. “Requiring anti classification or classification parity can, perversely, harm the very groups they were designed to protect; and calibration, though generally desirable, provides a little guarantee that decisions are equitable.”

This has been an excerpt from the Humane AI newsletter, run by me, Roya Pakzad. You can subscribe to the newsletter here.